Character Updates & Facial Animation – CitizenCon 2018 Summary

Welcome to some more Star Citizen, with a summary of two of the CitizenCon presentations: "Evolution of Facial Animation, Exploring the Advanced Tech" and "What a Bunch of Characters, Art & Tech Bring Players & NPCs to Life". Both share some similarities, so it seemed sensible to blend them together.

The CitizenCon 2018 | Facial Animation Tech Presentation:

The Presentation went over the improvements made to facial animations & attachments.

The original Bishop Speech was made in Maya and baked into an animation.

Star Citizen needs multiple HQ faces in a scene, and in some situations those characters can be interacted with while the scene is playing. That necessitates a different approach.

They use Runtime Rigging from 3Lateral, literally executing the rig on the CPU as the animation is happening.

They can animate & manipulate faces using faceboards, a simple UI for an animator for driving the animation rig.

Everything has moved into the engine now, which has given them some advantages:

Lightweight Skeleton – everything is generated on the fly, actually reducing the amount of work needed for the process by around 70%.

More efficient Animation Compression, reducing data size by around 80%. It’s all lossless too.

CPU Performance – They have doubled the speed of offline rigging, with 10 heads updated every 1ms.

Runtime Animation Retargeting – It allows you to transfer any performance to any other face.

FOIP (Face Over IP) – This is made possible by retargeting and runtime rigging.

PCAP Transformation – This allows NPCs to look at a player wherever they are in an interactive scene and change their performance accordingly.

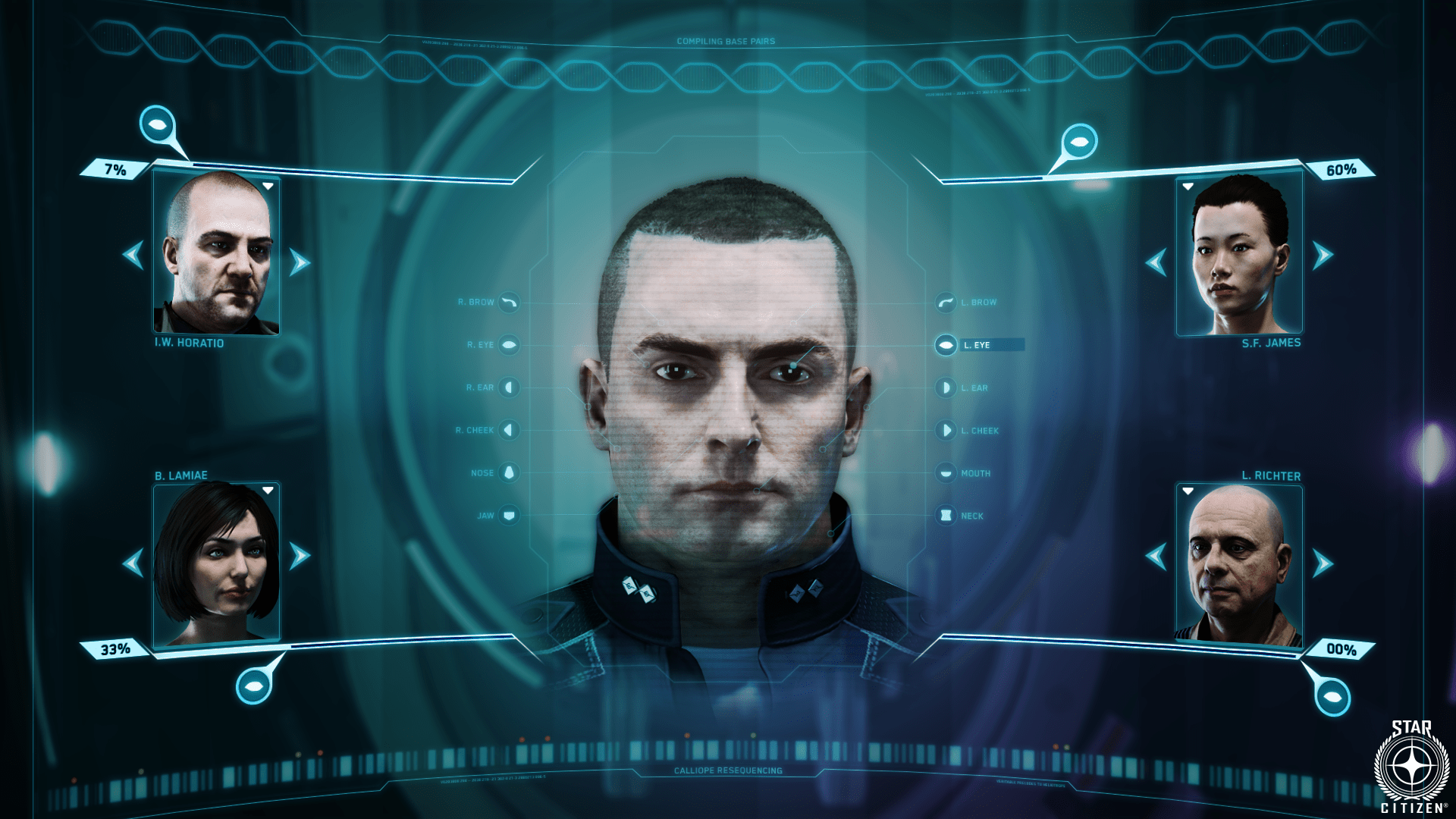

Gene Splicing is still being worked on. They have DNA files that contain loads of facial data.

You can use this to build a character, and characters could also have asymmetrical faces.

This allows for incredibly unique, realistic faces built from various parts or by merging various faces.

They can change the shape and position of the skull, eyes, teeth, tongue, skin weight & blend shapes.

The rig logic will work with any face you make.
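To make the idea of building a face from parts of several heads a bit more concrete, here is a minimal sketch. The region names, the single number per region and the weighting scheme are all assumptions made for illustration; the actual DNA file format was not shown in this level of detail.

```python
from dataclasses import dataclass

# Hypothetical face regions; the real DNA files hold far more data.
REGIONS = ("skull", "eyes", "nose", "mouth", "jaw")


@dataclass
class Head:
    name: str
    # One made-up shape value per region, standing in for real mesh data.
    shape: dict


def splice(parents, weights_per_region):
    """Blend each region independently from several source heads.

    weights_per_region maps a region name to one weight per parent.
    Weights are normalised so they sum to 1 within each region.
    """
    result = {}
    for region in REGIONS:
        weights = weights_per_region[region]
        total = sum(weights)
        result[region] = sum(
            w / total * parent.shape[region]
            for w, parent in zip(weights, parents)
        )
    return result


head_a = Head("A", {region: 0.0 for region in REGIONS})
head_b = Head("B", {region: 1.0 for region in REGIONS})

# Blend everything 50/50, except the eyes, which come entirely from head B.
weights = {**{region: [1, 1] for region in REGIONS}, "eyes": [0, 1]}
child = splice([head_a, head_b], weights)
```

Because every region is weighted separately, the same pool of heads can produce many different combinations, which is the point made about variety in the genepool.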

They ran into the attachment problem: if you can change the size of the head and where the eyes and ears are, how do you fit helmets, hats, sunglasses and facial hair without massive clipping issues?

They came up with wrap deformers: beards, hair, hats and other elastic attachments morph and align appropriately to any size of head & shape of face.

The system takes rigid attachments like glasses, helmets and brimmed hats and scales them rather than morphing them like rubber.

They can have items that have both elastic and rigid components, which they are still working on. They want a fully automatic approach though, & need to optimize it to render many on-screen characters at high frame rates.
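The difference between elastic and rigid attachments can be sketched roughly as follows. This is a much simpler stand-in for a real wrap deformer: heads are reduced to a hypothetical (width, height, depth) box, elastic items stretch per axis, and rigid items get a single uniform scale so they keep their proportions.

```python
def fit_attachment(vertices, base_head, target_head, rigid):
    """Fit attachment vertices authored for base_head onto target_head.

    Heads are (width, height, depth) tuples and vertices are (x, y, z)
    tuples relative to the head centre. Both are illustrative assumptions.
    """
    ratios = [t / b for t, b in zip(target_head, base_head)]
    if rigid:
        # Rigid items keep their proportions: use one uniform scale factor.
        uniform = sum(ratios) / len(ratios)
        ratios = [uniform] * 3
    # Elastic items follow the head's shape: each axis stretches on its own.
    return [tuple(c * r for c, r in zip(v, ratios)) for v in vertices]


base = (2.0, 2.0, 2.0)
target = (4.0, 2.0, 3.0)
beard = fit_attachment([(1.0, 1.0, 1.0)], base, target, rigid=False)
glasses = fit_attachment([(1.0, 1.0, 1.0)], base, target, rigid=True)
```

Here the beard vertex ends up stretched differently on each axis, while the glasses vertex is scaled by the same factor everywhere.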

Though they have a few entirely artist-made faces of the actors they used in SQ42, a (and I hate to use the term) near infinite number of faces can be generated.

The DNA (the custom head and face info) is encoded in 48 hex numbers. This small amount of data is sent to the server in multiplayer.
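As a rough idea of how small that payload is, here is a sketch that packs 48 values into a hex string and back. It assumes each of the 48 numbers fits in one byte (0 to 255), which the presentation did not spell out, and the function names are made up for illustration.

```python
DNA_LENGTH = 48  # number of hex values mentioned in the presentation


def encode_dna(values):
    """Pack 48 integers (assumed to be 0-255) into a hex string."""
    if len(values) != DNA_LENGTH:
        raise ValueError(f"expected {DNA_LENGTH} values, got {len(values)}")
    # bytes() raises ValueError itself if a value is outside 0-255.
    return bytes(values).hex()


def decode_dna(text):
    """Turn the hex string back into the list of 48 integers."""
    values = list(bytes.fromhex(text))
    if len(values) != DNA_LENGTH:
        raise ValueError(f"expected {DNA_LENGTH} values, got {len(values)}")
    return values


dna = [i * 5 for i in range(DNA_LENGTH)]  # placeholder face data
wire = encode_dna(dna)                    # what would go to the server
restored = decode_dna(wire)
```

Under that one-byte assumption the whole face description is 48 bytes, or 96 hex characters as text.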

This enables them to fill a universe with unique, high quality digital humans and aliens.

There are no background characters. The system is super efficient & gives huge, realistic customization options to players and NPCs, so basically there will be no duplicate characters or NPCs in the future.

They currently have 122+ heads in their database, a good amount of which have not been revealed yet and were recorded for SQ42. The more heads, the more variety in the genepool.

They are going to scale the LODs based on distance, they use the GPU in an efficient manner & they are going to implement the Vulkan API for better performance.
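Distance-based LOD selection usually boils down to something like the sketch below. The thresholds are invented for illustration; no actual distances were given in the presentation.

```python
import bisect

# Hypothetical distance thresholds in metres, sorted ascending.
LOD_THRESHOLDS = [5.0, 15.0, 40.0]


def select_lod(distance):
    """Return the LOD index for a character at the given distance.

    0 is the most detailed level; each threshold passed drops one level.
    """
    return bisect.bisect_right(LOD_THRESHOLDS, distance)


close_up = select_lod(2.0)     # within the first threshold
mid_range = select_lod(20.0)   # past the first two thresholds
far_away = select_lod(100.0)   # past all thresholds
```

A character right in front of you gets level 0, while one across the room drops to a cheaper level.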

They are currently making the UI for the DNA Character Customizer, getting DNA info to persist in a database, porting all existing attachments & replacing all the legacy NPCs with non-identical ones.

It is currently slated for 3.5 & it will have female character customization too.

They will continue adding to the genepool.

CitizenCon 2018 | What a Bunch of Characters

The Presentation looked at the character pipeline from Project Selection, Narrative Design, Concept, High Poly, Low Poly, Materials, Rig / Simulation & Items and loadouts.

Some of this overlaps with what was said in the Facial Animation Presentation, so I’ll at least partially omit some pieces.

Characters need to be believable. They don’t want duplicate or background characters & they want them as high detail as possible. All characters can be interacted with face to face.

Uniforms, clothing, armor and attachments are shared between characters.

SQ42 has over 250 speaking characters.

Characters need to be able to have different costumes based on the story arc and what the character is doing.

The PU needs full customization of player characters and gear, NPCs themed to the area they reside in, and modular assets for any given need.

The needs for the PU are more about clothing, assets & customization for the player's character.

There are NPCs that have a more important role as mission givers that require theming and hero work, but typically the PU’s needs are more generic.

Models get created in high poly, then made more efficient by converting them into a low poly model that is used in game.

They have a load of materials in their library to use for various characters, costumes and armor that form layers.

Vanduul armor was shown off and looks amazing, it uses 5 materials blended together.

They can have as many layers and blends as they want without any performance hit.

They can add shine or reflection, cloth fraying, waxiness on the materials too.

They’ve been improving skin and wrinkles making it more realistic.

Skin pores are mapped so they do not reflect light evenly.

When compared, the differences between the models now and 2 years ago are huge.

They use hair cards for hair, which is the industry standard way of doing hair (basically blocks, each representing a few hundred or thousand hairs, that then need to be placed in appropriate areas). But they’ve made improvements by having hair fade into itself better, making it less obvious where it intersects with the scalp, and adding per pixel variation and TSAA / Dithering.

They are actually working on a potentially better approach: they want to have individual hairs done via splines, then have the engine convert that to polygons. The quality of this would be amazing and it also saves them time. One of the problems is that it is currently very resource hungry.

The standard human male skeleton in game has 170 joints.

But they have different skeletons for Vanduul, Banu and females.

These skeletons are skinned, weighted with range of motion in mind & then rigged and simulated to make a player model.

Their Simulation uses the physics system to make the characters look more real without bespoke work, it’s also referred to as runtime rigging. No specific additional animations are required for clothing that is hanging, bunched up or for prosthetics.

Items and item ports on characters work in the same way as ship items and ports.

There is a hierarchy of these ports, which attach to each other and go on top of each other.

They cull layers that are hidden underneath another layer.
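A simplified sketch of that kind of layer culling is below. The item names, the body regions and the rule used (an item is dropped when every region it occupies is covered by items on higher layers) are assumptions for illustration, not the actual system.

```python
from dataclasses import dataclass


@dataclass
class Item:
    name: str
    layer: int     # higher layers are worn on top of lower ones
    regions: set   # body regions the item occupies and hides beneath it


def visible_items(items):
    """Return the names of items that are not fully hidden by higher layers."""
    visible = []
    for item in items:
        covered = set()
        for other in items:
            if other.layer > item.layer:
                covered |= other.regions
        # Keep the item if at least one of its regions is still exposed.
        if not item.regions <= covered:
            visible.append(item.name)
    return visible


loadout = [
    Item("undersuit", 0, {"torso", "arms", "legs"}),
    Item("tshirt", 0, {"torso"}),
    Item("chest_armor", 1, {"torso"}),
]
shown = visible_items(loadout)
```

The t-shirt sits entirely under the chest armor so it is culled, while the undersuit stays because its arms and legs are still exposed.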

They then set the loadouts of any given character. On the dev side this includes adding a head, eyes and body. For the player, they customize their item loadouts in game as they wish.

In the future they are going to be working on:

Alien Races, Creatures (they will be adding life to Hurston), Body Variation (not everyone will be mega buff), Procedural Characters so the full creation and loadout of NPCs is automated, Gene Splicing & even cyber / prosthetic limbs.

I hope that was interesting and informative. I’ll leave the links to the full presentations below if you are so inclined.